📄 Robots.txt Generator

Build a precise robots.txt file fast: allow or disallow paths, add sitemaps, and set a crawl delay.

How to use

Last updated: 4 months ago

Step-by-Step Guide to Using the Robots.txt Generator

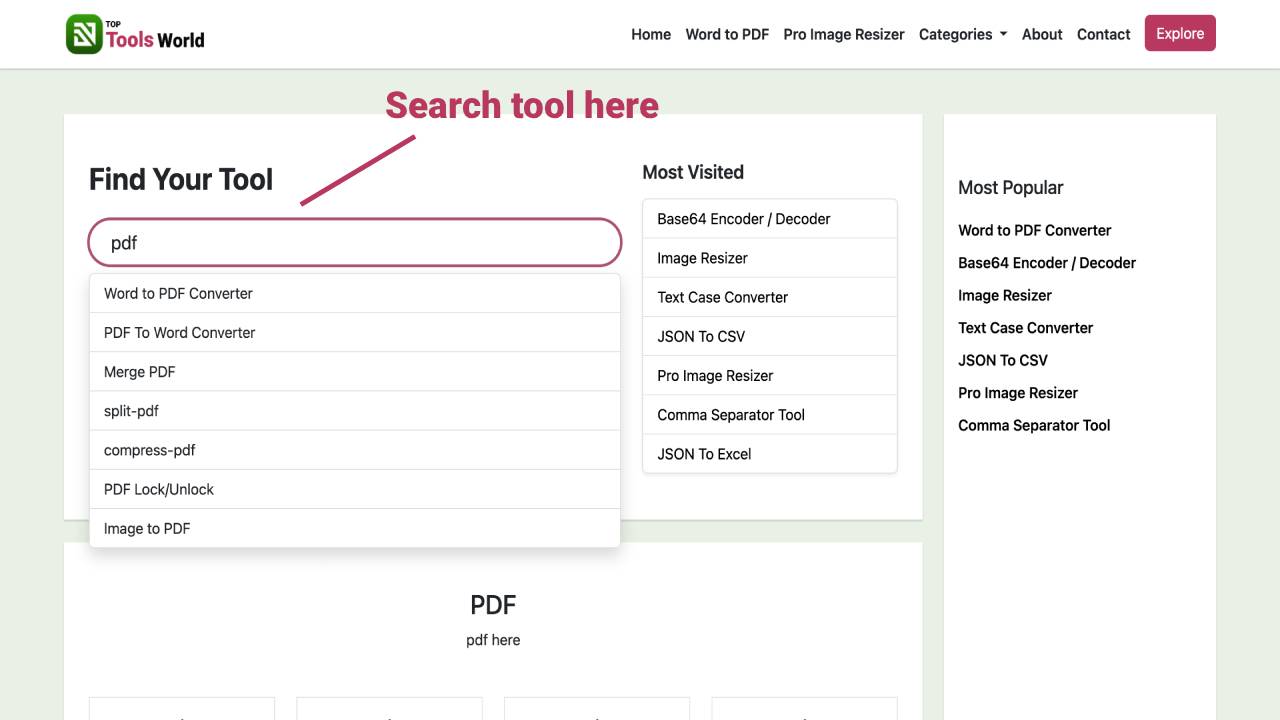

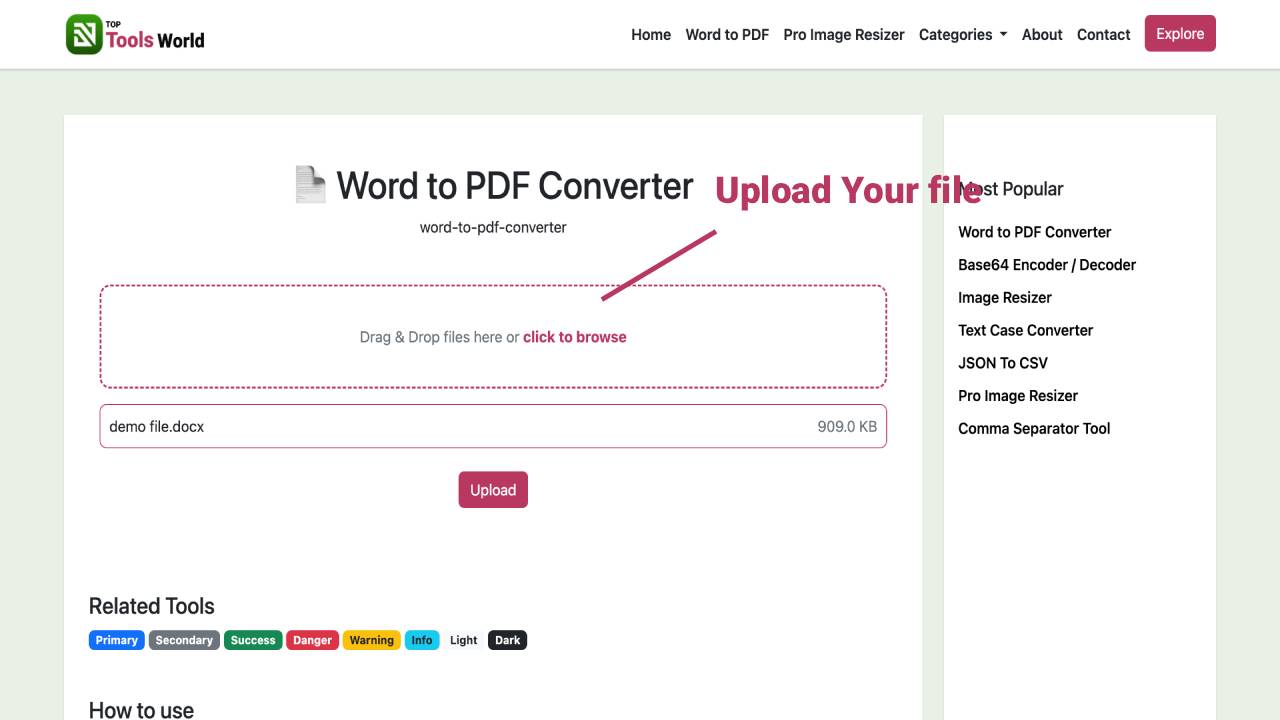

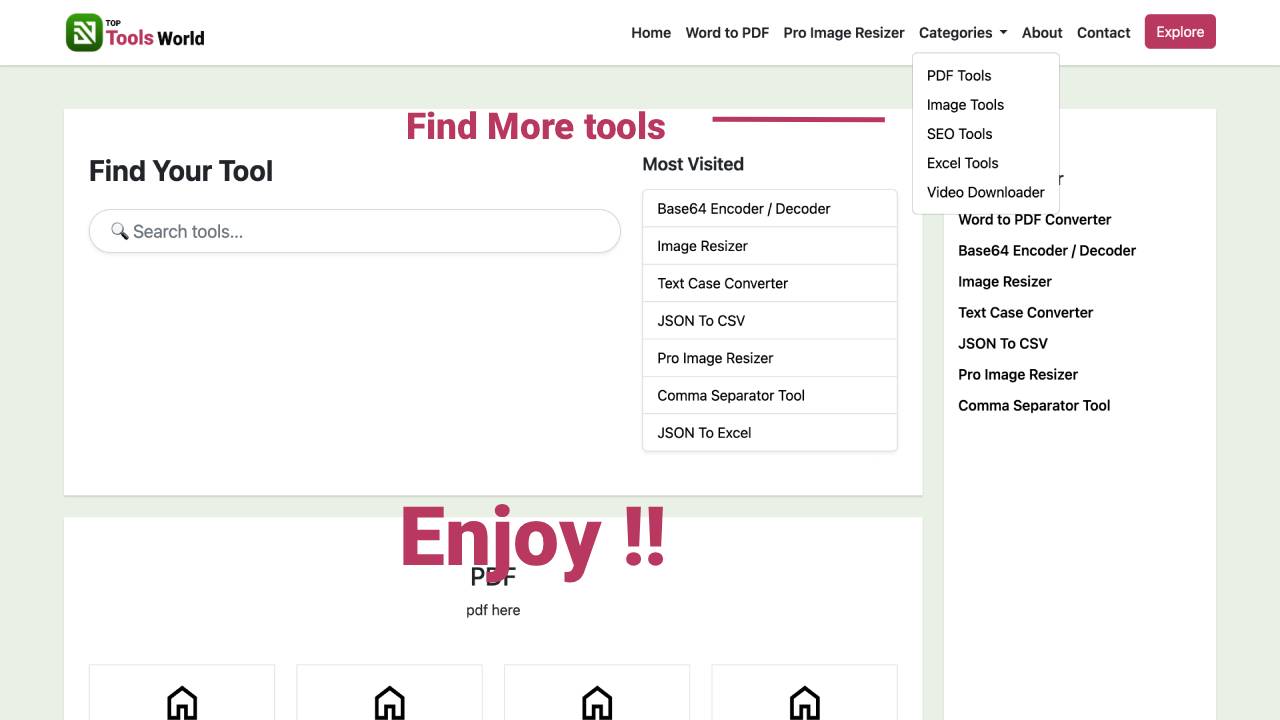

- Open the tool on Top Tools World and take a quick look at the simple layout. You will see clear spaces to set who the rules apply to, what to allow, what to block, and optional settings like sitemaps and crawl pace.

- Choose who you want the rules to apply to. If you want the same rules for every search engine bot, keep the default option. If you need rules for a specific crawler, type its user-agent name (for example, Googlebot).

- List the areas of your site you want bots to access. This is perfect for public sections like images, blog posts, or product pages. Separate multiple entries with commas and keep the paths clean and consistent.

- Add any sections you want to block from crawling. Common examples include admin areas, account pages, filters, or duplicate content directories. Be specific so that important pages remain accessible.

- Paste your sitemap link, as a full absolute URL, to help search engines discover your content faster. If you have multiple sitemaps, you can include them as separate entries. A sitemap reference helps discovery, though it does not guarantee that every listed page gets indexed.

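- Decide if you want to slow down crawling for all or some bots. Set a short delay if your server is under heavy load or if you want to smooth out crawl spikes. Not every crawler honors a crawl delay (Googlebot, for example, ignores the Crawl-delay directive), so treat it as a request rather than a guarantee. If you are unsure, you can leave this blank.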

- Review your selections to ensure they reflect your intentions. Confirm that key areas are accessible and that sensitive or low-value sections are restricted. A quick review prevents costly indexing mistakes.

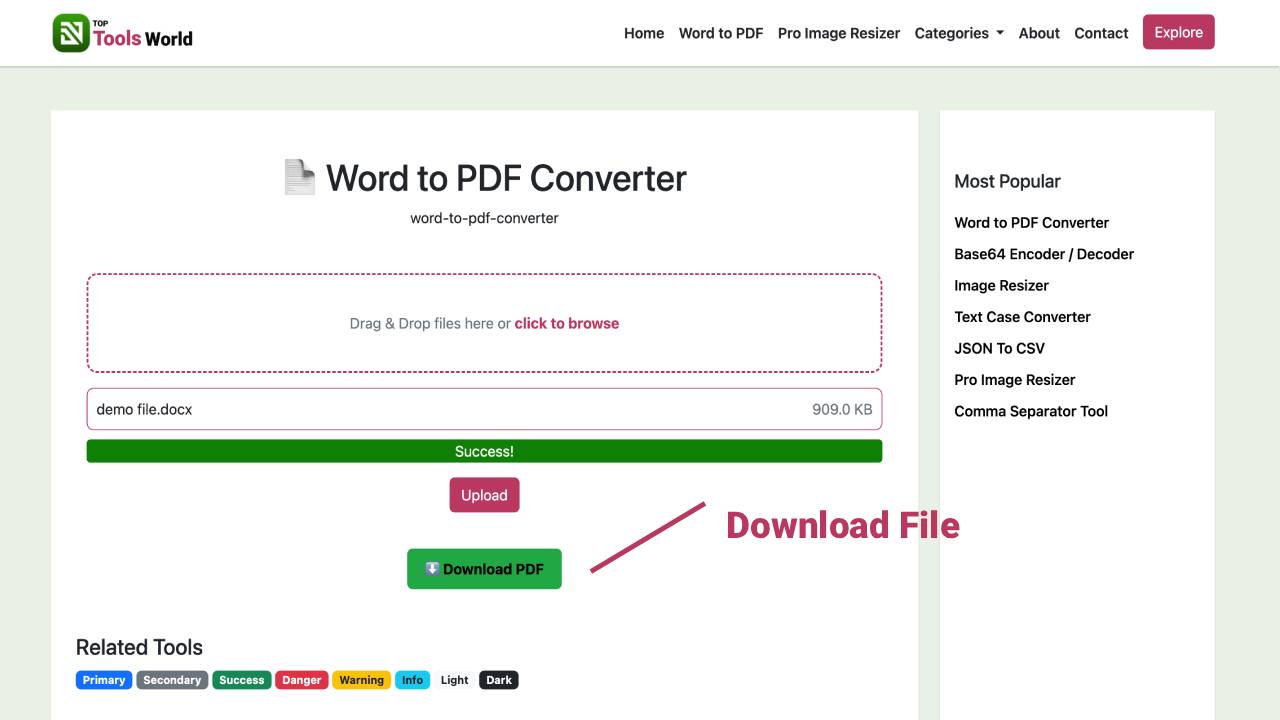

- Click to generate your robots.txt. In a second, you will see a formatted set of rules ready to use. Top Tools World presents it clearly, so you can scan and verify before you copy.

- Copy the output and place it at the root of your website (the standard public location). This is where search engines automatically look for the file when they visit your domain.

- Test and refine. Use a robots.txt tester or your preferred webmaster tools to confirm that the paths you meant to allow or block behave as expected. If needed, return to Top Tools World, adjust your inputs, and regenerate.
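
The steps above can be sketched in a few lines of Python. This is only an illustration of how the form inputs map onto robots.txt directives, not the tool's actual implementation; the helper names `split_entries` and `build_robots_txt`, the example paths, and the `example.com` URL are all assumptions made for the sketch.

```python
from typing import Iterable, Optional


def split_entries(raw: str) -> list[str]:
    """Split a comma-separated field into clean, non-empty entries."""
    return [part.strip() for part in raw.split(",") if part.strip()]


def build_robots_txt(
    user_agent: str = "*",
    allow: Iterable[str] = (),
    disallow: Iterable[str] = (),
    sitemaps: Iterable[str] = (),
    crawl_delay: Optional[int] = None,
) -> str:
    """Assemble one robots.txt group from form-style inputs (hypothetical helper)."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    lines += [f"Disallow: {path}" for path in disallow]
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")
    # Sitemap lines are not tied to a user-agent group, so they follow a blank line.
    sitemap_lines = [f"Sitemap: {url}" for url in sitemaps]
    if sitemap_lines:
        lines.append("")
        lines += sitemap_lines
    return "\n".join(lines) + "\n"


example = build_robots_txt(
    allow=split_entries("/blog/, /images/"),
    disallow=split_entries("/admin/, /account/"),
    sitemaps=["https://www.example.com/sitemap.xml"],
    crawl_delay=5,
)
print(example)
```

The printed text is what you would save as `robots.txt` at the root of the site.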

Key Benefits of Using Robots.txt Generator on Top Tools World

- Ease of use: The interface is streamlined so anyone can create a correct robots.txt without guesswork.

- Accuracy: Clean, standards-aligned output helps avoid indexing mistakes and crawler confusion.

- Speed: Generate a production-ready file in seconds, even for complex sites.

- Reliability: Built to reflect widely accepted search engine guidelines, reducing the risk of errors.

- Time-saving: No need to research syntax or memorize rules; simply choose what to allow or block.

- Flexible scenarios: Ideal for new sites, site migrations, seasonal campaigns, staging environments, or large catalogs.

- Clarity for teams: The generated rules are readable, making it easy for marketers, SEOs, and content managers to collaborate.

- Confidence: With Top Tools World, you get a clear, organized result you can trust.

Why Choose Our Robots.txt Generator? (Unique Advantages)

Top Tools World is designed for performance-focused marketing and SEO teams who want a quick, dependable way to control how crawlers interact with their sites. Our tool focuses on clarity, so you can make precise decisions without technical complexity.

- Superior experience: A thoughtful flow that guides you from strategy to a clean final file.

- Advanced options when you need them: Easily set different access rules, include sitemaps, and manage crawl pacing.

- Accuracy and performance: Outputs adhere to common crawler expectations to keep your indexing stable and predictable.

- Trust and reliability: Built by SEO specialists, tested across common site structures and use cases.

- Preferred by users: Marketers, SEOs, and site owners choose Top Tools World because it’s fast, clear, and frustration-free.

What is a Robots.txt Generator? A Complete Overview

A robots.txt generator helps you create the standard file that tells search engine crawlers which parts of your website they can or cannot access. This file sits at the root of your domain and acts as a signpost: it guides bots to public content, asks them to stay out of areas you do not want crawled, and can point them to your sitemap. The file is publicly readable and compliant crawlers follow it voluntarily, so it is not a security mechanism. While it does not directly remove pages from search results, it controls crawling behavior, which influences how efficiently your site is discovered and indexed.

Here’s how it helps: imagine you run an online store. You want search engines to crawl product and category pages, but not internal search results, checkout, or account pages. You might also want to block filter parameters that cause duplicate content. With a generator, you can quickly specify what’s allowed and what’s off-limits, then include your sitemap so bots can find all important URLs quickly.

Common problems it solves include wasted crawl budget (bots spending time on low-value or duplicate pages), unnecessary crawling of admin or account areas, and missed discovery due to a missing sitemap reference. Clear rules guide crawlers to your best content and away from dead-ends.

Practical examples include: allowing a public images folder so your visuals can appear in image search; disallowing an admin folder to prevent unnecessary crawling; and adding a sitemap link so all new blog posts and product pages are discovered faster. With Top Tools World, these choices are presented plainly, making it easy to get them right the first time.
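
As a rough illustration of the online store scenario, the sketch below parses a sample file with Python's standard `urllib.robotparser` module and checks a few URLs. The paths and the `shop.example.com` domain are invented for the example. Note that this parser applies the first matching rule and does not understand wildcards, so real crawlers may resolve overlapping rules differently; the rules here are kept non-overlapping to avoid that ambiguity.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical store rules: public catalog pages allowed, private flows blocked.
STORE_ROBOTS = """\
User-agent: *
Allow: /products/
Allow: /category/
Allow: /images/
Disallow: /search/
Disallow: /checkout/
Disallow: /account/
Disallow: /admin/

Sitemap: https://shop.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(STORE_ROBOTS.splitlines())

for path in ["/products/blue-mug", "/checkout/payment", "/admin/login"]:
    allowed = parser.can_fetch("*", f"https://shop.example.com{path}")
    print(path, "->", "allowed" if allowed else "blocked")
```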

Advanced Tips & Best Practices

- Start simple: Allow your main content sections and block only clearly non-public or low-value areas. You can refine later.

- Don’t block essential assets: Avoid blocking stylesheets, scripts, or important media folders that are needed for rendering.

- Be precise with paths: A small typo can unintentionally block or allow areas. Paths are case-sensitive and should start with a leading slash, so check each entry against your real URLs.

- Use crawl pacing sparingly: Only set a delay if your server struggles under load. Otherwise, let crawlers work at normal speed.

- Leverage sitemaps: Always include your sitemap link so bots can discover content quickly, especially for large sites.

- Protect staging and test areas: Keep crawlers out of non-public environments to avoid duplicate content and confusion, and put anything truly private behind a login as well.

- Revisit after site changes: If you restructure folders or launch new sections, update your robots.txt accordingly.

- Test critical paths: After publishing, use a robots.txt tester to confirm that key pages are accessible and private areas are blocked.

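- Coordinate with canonical tags: Robots rules guide crawling and canonical tags guide consolidation, so plan them together. Avoid blocking a URL whose canonical tag you rely on, because crawlers cannot read tags on pages they are not allowed to fetch.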

- Document your intent: In team settings, keep a brief note about why sections are allowed or blocked to avoid future mistakes.

- Regenerate when needed: Return to Top Tools World any time you need to tweak your strategy and produce a fresh robots.txt.
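
Several of these tips (precise paths, unblocked assets, testing critical paths) can be checked automatically. The sketch below is one possible approach using Python's standard `urllib.robotparser`; the function name `find_rule_problems` and the sample rules are assumptions for illustration. The sample deliberately contains a casing mistake (`/Staging/` instead of `/staging/`) to show how such a typo surfaces.

```python
from urllib.robotparser import RobotFileParser


def find_rule_problems(robots_text, must_allow, must_block, agent="*"):
    """Return human-readable problems; an empty list means every check passed."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    problems = []
    for path in must_allow:
        if not parser.can_fetch(agent, path):
            problems.append(f"{path} should be crawlable but is blocked")
    for path in must_block:
        if parser.can_fetch(agent, path):
            problems.append(f"{path} should be blocked but is crawlable")
    return problems


# Hypothetical rules with a casing typo in the second Disallow line.
ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /Staging/
"""

problems = find_rule_problems(
    ROBOTS,
    must_allow=["/blog/post-1", "/assets/site.css"],
    must_block=["/admin/users", "/staging/index.html"],
)
for problem in problems:
    print(problem)
```

Because paths are matched case-sensitively, the check reports that `/staging/index.html` is still crawlable. A script like this can run after every robots.txt change, alongside a search engine's own tester.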

FAQs About the Robots.txt Generator

1) What is robots.txt and why do I need it?

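It’s a simple text file that tells search engine bots which parts of your site they can crawl. It helps keep crawlers out of areas you do not want crawled, reduce duplicate crawling, and guide bots to your most valuable content. It is not an access control, so truly sensitive content still needs a login.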

2) Will robots.txt remove pages from search results?

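No. It controls crawling, not indexing directly, and a blocked URL can still appear in results if other pages link to it. To remove a page from search results, use a noindex signal or a removal tool, and leave the page crawlable so that bots can actually see the noindex. Robots.txt is for guiding crawler access.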

3) Do I need a robots.txt for a small site?

It is not strictly required, since crawlers treat a missing file as permission to crawl everything, but it’s still useful. Even small sites benefit from clear crawling rules and a sitemap reference. Top Tools World makes it quick to set up.

4) Should I block CSS or JS files?

Generally no. Search engines need important assets to render pages correctly. Only block assets that are truly unnecessary for rendering.

5) Where should I place the file?

Put it at the root of your domain so crawlers can find it automatically (for example, yoursite.com/robots.txt).
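
Crawlers look for the file per host, at the root path only. As a small sketch of that rule, the hypothetical helper `robots_url` below derives the expected robots.txt location from any page URL using Python's standard `urllib.parse`.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host; replace the path and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://yoursite.com/blog/post?id=3"))
print(robots_url("https://shop.yoursite.com/products/"))
```

The second call shows that a subdomain has its own robots.txt, separate from the main domain's file.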

6) What if I have multiple sitemaps?

You can include more than one sitemap reference. This is common for large sites with separate sitemaps for blogs, products, or images.
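
In the file itself, each sitemap gets its own `Sitemap:` line. The sketch below, with invented `example.com` URLs, reads them back using `RobotFileParser.site_maps()` from Python's standard library (available in Python 3.8 and later).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with one Sitemap line per sitemap.
ROBOTS_WITH_SITEMAPS = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap-blog.xml
Sitemap: https://www.example.com/sitemap-products.xml
Sitemap: https://www.example.com/sitemap-images.xml
"""

sitemap_parser = RobotFileParser()
sitemap_parser.parse(ROBOTS_WITH_SITEMAPS.splitlines())

# site_maps() returns None when the file lists no sitemaps.
sitemaps = sitemap_parser.site_maps() or []
for url in sitemaps:
    print(url)
```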

7) How often should I update robots.txt?

Update it whenever your site structure or crawling strategy changes. After updates, regenerate and publish using Top Tools World.

8) Can I set different rules for different bots?

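Yes. You can tailor instructions for specific crawlers if you have unique needs, then use a general rule for all others. Keep in mind that a crawler matching a specific group follows only that group, so repeat any shared rules there.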
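
A minimal sketch of per-bot groups, again using Python's standard `urllib.robotparser` with made-up paths and a made-up `OtherBot` name: the Googlebot group and the general group are evaluated separately, so a rule that appears only in the general group does not apply to Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group for Googlebot, one general group for everyone else.
PER_BOT_ROBOTS = """\
User-agent: Googlebot
Disallow: /experiments/

User-agent: *
Disallow: /experiments/
Disallow: /archive/
Crawl-delay: 10
"""

bot_parser = RobotFileParser()
bot_parser.parse(PER_BOT_ROBOTS.splitlines())

for agent in ["Googlebot", "OtherBot"]:
    archive_ok = bot_parser.can_fetch(agent, "/archive/2020/")
    delay = bot_parser.crawl_delay(agent)
    print(f"{agent}: archive allowed={archive_ok}, crawl delay={delay}")
```

Here `/archive/` stays open to Googlebot because its own group does not list it, while other bots are blocked and asked to wait between requests.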

Whether you’re launching a new site or refining an established one, the Robots.txt Generator from Top Tools World gives you a fast, reliable way to manage crawler access. Build confidence in your crawl strategy, save time, and keep your most valuable content front and center in search results with Top Tools World.